My career has been built on understanding how to design conversations that reveal truth—from crafting AI prompt frameworks for healthcare and legal applications, to collaborating with domain experts to understand their real workflows, to teaching myself iOS development and shipping three apps that solve real problems.

At Airia, I led research initiatives that shaped AI platform behavior across multiple industries. I designed conversation systems that had to be precise, compliant, and genuinely helpful. I worked directly with specialists—lawyers, educators, healthcare professionals—understanding their needs and expertise so I could represent them accurately.

As a Prompt Engineer, I've mastered the intersection of psychology, language, and technology: How do you ask a question that gets an honest answer? How do you structure information so it's clear and reliable? How do you handle complexity professionally?

This is precisely what qualitative research demands.

I'm trilingual (English, Portuguese, Spanish) with domain expertise spanning healthcare technology, legal compliance, education, and business. My background in product management, team leadership, and data analysis means I understand research in context—not just as isolated interviews, but as part of larger strategic decisions.

I bring both research rigor and real-world perspective.

I help people connect with AI in a way that feels natural, approachable, and genuinely helpful.

About me

Research & Analysis

→ Research Design | Conversation Architecture | Interview Methodology | Qualitative Analysis | Due Diligence Research | Expert Recruitment

Specialized Knowledge

→ Healthcare Technology | EdTech | Legal Compliance | Business Operations | AI Systems & Prompt Engineering | Product Strategy

Technical

→ iOS Development (Swift, Xcode) | SQL & Data Analysis | User Research | Cross-functional Project Management

Languages

→ English | Portuguese | Spanish

Hush Mind

Adaptive sound programs for focus and sleep

Most sound apps on the market — like Endel, Brain.fm, and BetterSleep — rely on loops. They repeat over time and can lose their effect. Hush Mind was created to be different: programs, not loops. Adaptive sound experiences that evolve to match focus and rest cycles.

My Role

As Product Manager:

Conducted competitive research analyzing market leaders (Endel, Brain.fm, BetterSleep) to identify gaps in adaptive vs. static sound experiences.

Defined the product roadmap and prioritized MVP features based on user research and behavioral insights.

Coordinated a cross-functional team across design and development, managing App Store readiness including ASO strategy and community engagement.

As Conversational AI Designer:

Wrote the prompts that the developer integrated directly into the app's code, designing the conversational logic that powers Hush Mind's adaptive sound programs.

Shaped the adaptive intelligence that personalizes sound experiences based on user goals, mood inputs, and session timing, creating dynamic, context-aware responses.

Designed multi-turn conversation flows that feel natural and supportive, balancing user autonomy with intelligent recommendations.
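To make "programs, not loops" concrete, here is a minimal Python sketch of a session built as a timed sequence of changing phases. The sequence is chosen from a user's goal, mood, and session length. The phase names, tempo and intensity values, the "anxious" damping rule, and the `build_program` function are all hypothetical illustrations. They are not Hush Mind's actual sound engine or prompt logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    name: str
    minutes: int
    tempo_bpm: int      # hypothetical sound parameter
    intensity: float    # hypothetical density scale: 0.0 sparse to 1.0 dense


# Hypothetical phase shapes: (name, share of session, tempo, intensity).
PROGRAM_SHAPES = {
    "sleep": [("settle", 0.3, 70, 0.6), ("deepen", 0.4, 60, 0.4), ("drift", 0.3, 50, 0.2)],
    "focus": [("warm-up", 0.2, 80, 0.4), ("flow", 0.6, 90, 0.7), ("cool-down", 0.2, 75, 0.3)],
}


def build_program(goal: str, mood: str, session_minutes: int) -> list[Phase]:
    """Build a session as a sequence of changing phases rather than one repeating loop."""
    if goal not in PROGRAM_SHAPES:
        raise ValueError(f"unknown goal: {goal}")
    # Hypothetical rule: an "anxious" mood input softens every phase.
    damping = 0.8 if mood == "anxious" else 1.0
    phases = [
        Phase(name, round(session_minutes * share), bpm, round(level * damping, 2))
        for name, share, bpm, level in PROGRAM_SHAPES[goal]
    ]
    # Give any rounding remainder to the last phase so durations add up to the session.
    remainder = session_minutes - sum(p.minutes for p in phases)
    last = phases[-1]
    phases[-1] = Phase(last.name, last.minutes + remainder, last.tempo_bpm, last.intensity)
    return phases
```

A loop-based app would return the same single phase repeated. Here, a sleep program winds tempo and density down across the session, and a focus program ramps up before easing off.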

Focus:

Competitive Research & Positioning: Researched competitors to position Hush Mind as adaptive rather than repetitive, grounded in user pain points with loop-based sound apps.

MVP Delivery: Scoped core features (sound engine, sequencer, and UI/UX) based on user needs research and technical feasibility

Launch Preparation: Led testing, App Store optimization, and launch planning—including user research to refine messaging and positioning.

Outcome:

Hush Mind was positioned for release as a next-generation focus and sleep tool. By blending prompt engineering with product management, I helped shape a sound experience that feels alive rather than static, setting it apart from traditional sound apps. The conversational AI design enables personalized, adaptive experiences that respond to each user's context, showing how research insight translates into AI implementation.

Arii

AI doesn’t have to be a polite, boring assistant. Meet Arii—my fiery, funny AI persona designed to keep users on track with their health goals.

Creating Arii required deep user research to understand when people need encouragement versus accountability—and designing conversational responses that balanced helpfulness with honesty.

Research Insight:

Large language models tend to default to politeness, but sometimes being helpful means being a little bold.

Through user testing and behavioral research, I found that personality can make AI advice both more effective and more memorable.

Bringing AI Personas to Life - Arii's Story

My Contributions

Conversational Design Decisions:

In Arii's design, I gave her 'permission' to speak strongly when a user's choices went against their goals—calibrating tone based on user context and relationship stage.

Designed multi-turn conversation flows that adapted Arii's personality intensity based on user history, goal proximity, and demonstrated preferences.
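One way to picture this calibration is as a small function that turns user context into a tone instruction for the persona's system prompt. The Python sketch below is a minimal illustration under stated assumptions. The 0 to 3 intensity scale, the thresholds (three sessions for rapport, 50% goal progress), the `UserContext` fields, and the `TONE_LEVELS` wording are hypothetical. They are not Arii's actual rules.

```python
from dataclasses import dataclass

# Hypothetical tone instructions appended to the persona's system prompt.
TONE_LEVELS = {
    0: "Be warm and encouraging. Do not criticize choices.",
    1: "Be friendly but honest. Gently point out trade-offs.",
    2: "Be direct. Name the conflict with the user's goal and suggest an alternative.",
    3: "Be bold and playful. Push back firmly when a choice works against the goal.",
}


@dataclass
class UserContext:
    sessions_completed: int      # proxy for relationship stage
    goal_progress: float         # 0.0 (just started) to 1.0 (goal reached)
    choice_conflicts_goal: bool  # does the current request work against the goal?
    max_intensity: int           # user's demonstrated preference ceiling (0-3)


def tone_instruction(ctx: UserContext) -> str:
    """Pick a tone level from relationship stage, goal conflict, and goal proximity."""
    level = 0
    if ctx.sessions_completed >= 3:
        level += 1  # some rapport has been earned
    if ctx.choice_conflicts_goal:
        level += 1
        if ctx.goal_progress >= 0.5:
            level += 1  # close to the goal, so worth protecting more firmly
    # Never exceed the user's own ceiling, and stay inside the defined scale.
    level = max(0, min(level, ctx.max_intensity, 3))
    return TONE_LEVELS[level]
```

The user's preference acts as a hard cap. Even a long-standing user close to their goal only gets the bolder tone if their history shows they welcome it.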

Real-World Testing:

During testing, I asked whether my husband's hotdog and beer were okay for his health plan. Arii's response?

"Step away from the hotdog and beer."

This testing phase revealed how conversational personality can turn behavioral nudges from generic advice into memorable, effective interactions. It also reinforced that empathy research combined with bold design creates stronger user outcomes than politeness alone.

Key Takeaway

We all laughed—and learned that personality can make AI advice not only effective, but unforgettable.

What this project demonstrated:

How user research shapes conversational tone and personality calibration.

The importance of iterative testing to find the right balance between supportive and direct.

That conversational AI design is as much psychology as technology—understanding when users need a friend versus a coach.

Education

Prompt design isn't just technical; it's personal.

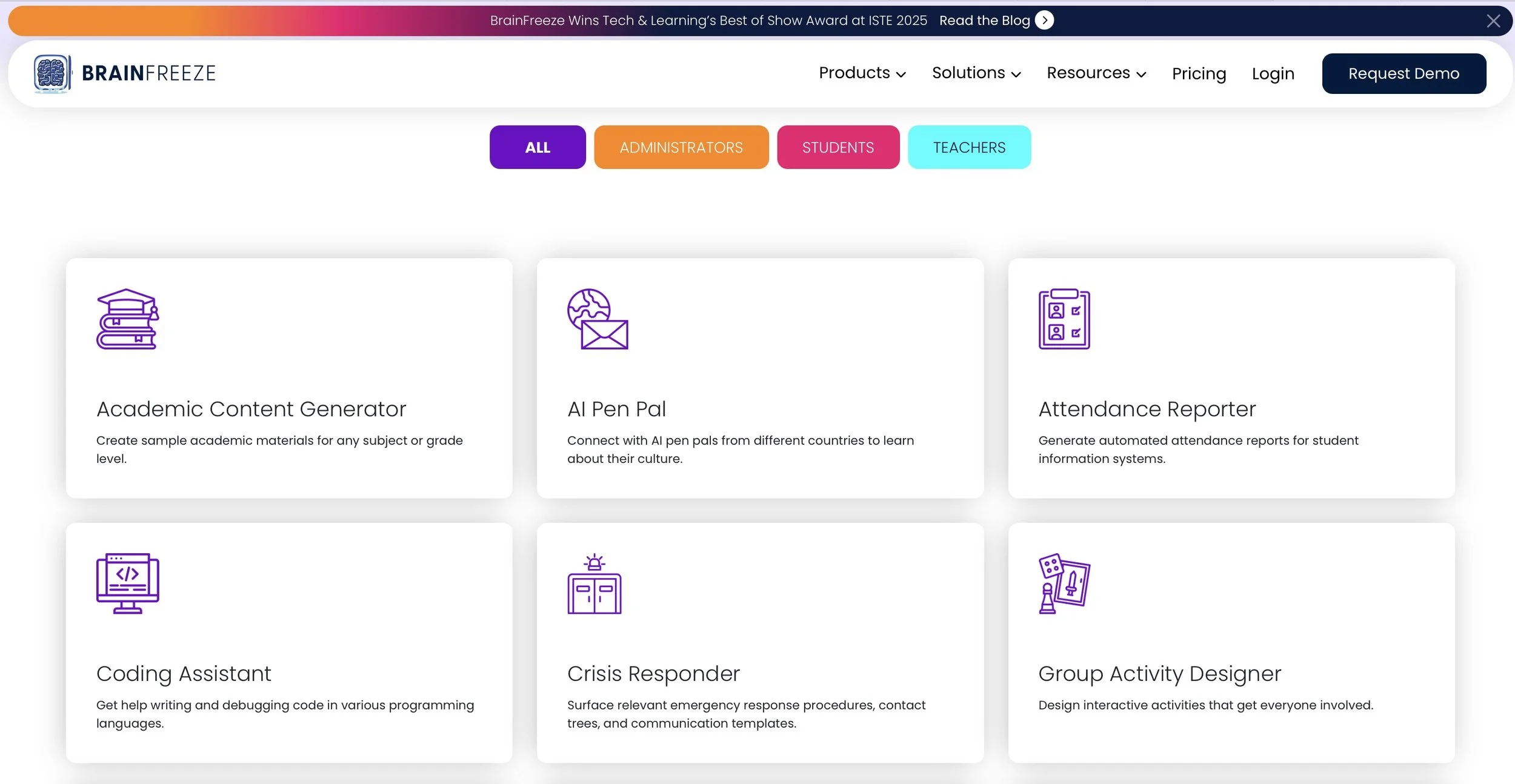

As both a parent and a prompt engineer, I had a unique lens when developing the prompt libraries for BrainFreeze, Airia's education platform. I wasn't just researching how AI should respond—I was imagining how I'd want it to talk to my own child.

This dual perspective shaped a research-driven design process: understanding child development stages, teacher workflows, and parent concerns before writing a single prompt.

My Contributions

Research & User Understanding:

Conducted stakeholder research across three distinct user groups: students (varied grade levels), teachers (classroom management needs), and parents (monitoring and support concerns).

Led early research that shaped the platform's voice—balancing encouragement, clarity, and adaptability across languages and grade levels.

Combined real-world parental instincts with iterative prompt testing across multiple AI models to ensure age-appropriate, culturally sensitive responses.

Conversational AI Design:

Built prompts that powered multilingual AI tutors and assistant personas, tailored to the learning needs of students, teachers, and parents.

Designed conversational flows for tutoring, study planning, and behavior support across diverse learning scenarios—from elementary homework help to high school exam prep.

Created adaptive dialogue systems that adjusted complexity, tone, and scaffolding based on student age, subject matter, and learning context.
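As a rough illustration of adjusting complexity, tone, and scaffolding by context, the Python sketch below assembles tutor instructions from grade, subject, learning context, and language. The grade bands, the instruction wording, and the context labels ("homework", "exam_prep") are hypothetical. They are not BrainFreeze's actual prompt library.

```python
def tutor_instructions(grade: int, subject: str, context: str, language: str = "English") -> str:
    """Assemble tutor instructions whose complexity and scaffolding depend on grade and context."""
    if not 1 <= grade <= 12:
        raise ValueError("grade must be between 1 and 12")
    # Hypothetical grade bands: elementary, middle school, high school.
    if grade <= 5:
        complexity = "Use short sentences and everyday words."
        scaffolding = "Break the problem into one small step at a time and check understanding after each step."
    elif grade <= 8:
        complexity = "Use clear explanations and define new terms when you introduce them."
        scaffolding = "Give a hint before showing any part of the solution."
    else:
        complexity = "Use precise subject vocabulary."
        scaffolding = "Ask the student to attempt the next step before you explain it."
    # Hypothetical context rule: homework help is gentler, exam prep is more focused.
    tone = "Be encouraging and patient." if context == "homework" else "Be focused and concise."
    return "\n".join([
        f"You are a {subject} tutor for a grade {grade} student. Respond in {language}.",
        complexity,
        scaffolding,
        tone,
    ])


print(tutor_instructions(3, "math", "homework"))
print(tutor_instructions(11, "chemistry", "exam_prep", language="Portuguese"))
```

Keeping each dimension (complexity, scaffolding, tone) as a separate line makes it easy to review and test one variable at a time.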

Multi-Persona Architecture:

Developed distinct AI personas including:

Academic Content Generator (teacher support)

AI Pen Pal (cultural learning & language practice)

Coding Assistant (programming education)

Crisis Responder (student safety & well-being)

Group Activity Designer (collaborative learning)

Each persona required unique conversational rules, boundary conditions, and response patterns.
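A persona's rules, boundary conditions, and escalation behavior can be kept as structured configuration and compiled into a system prompt. The Python sketch below shows that idea for two of the personas named above. The `Persona` fields and every rule, boundary, and escalation string are hypothetical examples, not the platform's real persona definitions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Persona:
    name: str
    audience: str
    rules: tuple[str, ...]           # conversational rules
    boundaries: tuple[str, ...]      # things the persona must never do
    escalation: Optional[str] = None  # what to do when a boundary is reached


def system_prompt(p: Persona) -> str:
    """Compile a persona's configuration into a system prompt."""
    lines = [f"You are {p.name}, supporting {p.audience}.", "Rules:"]
    lines += [f"- {rule}" for rule in p.rules]
    lines.append("Never:")
    lines += [f"- {boundary}" for boundary in p.boundaries]
    if p.escalation:
        lines.append(f"If a boundary is reached: {p.escalation}")
    return "\n".join(lines)


# Hypothetical example configurations.
PERSONAS = {
    "pen_pal": Persona(
        name="AI Pen Pal",
        audience="students practicing a new language",
        rules=("Write at the student's language level.", "Share cultural details from your assigned country."),
        boundaries=("Ask for or share personal contact information.",),
        escalation="Redirect the conversation to the language activity.",
    ),
    "crisis_responder": Persona(
        name="Crisis Responder",
        audience="students who may be in distress",
        rules=("Respond calmly and without judgment.", "Keep replies short and supportive."),
        boundaries=("Attempt to diagnose or counsel.", "Promise confidentiality."),
        escalation="Follow the platform's escalation protocol and direct the student to a trusted adult.",
    ),
}
```

Storing boundaries as data rather than free text buried in a prompt makes them easier to audit and harder to drop accidentally during revisions.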

Key Achievements

What This Work Demonstrated:

How user research across multiple stakeholder groups (students, teachers, parents) creates more comprehensive conversational design.

The importance of developmental psychology in prompt engineering—what works for a 3rd grader doesn't work for a high schooler.

That multilingual conversational AI requires cultural research, not just translation—understanding how encouragement, authority, and learning differ across cultures.

How empathy-driven design (thinking like a parent) produces safer, more effective educational AI than purely technical approaches.

Platform Impact:

BrainFreeze's prompt library supports tutoring across multiple subjects, grade levels, and languages—demonstrating scalable conversational architecture.

The Crisis Responder persona showcases how thoughtful prompt design can handle sensitive, high-stakes conversations with appropriate escalation protocols.

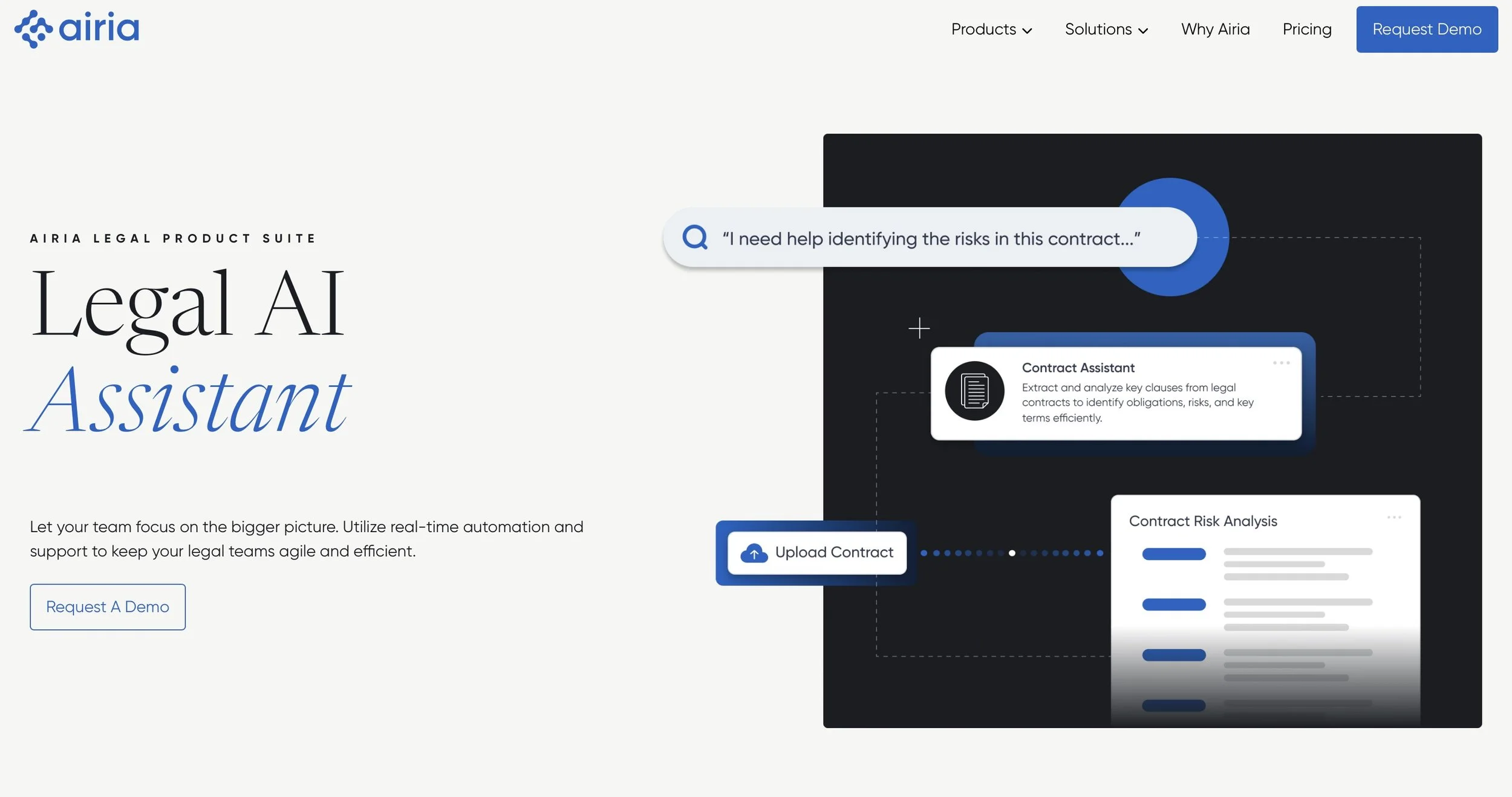

Legal

In legal work, clarity matters more than creativity.

Working with in-house attorneys and drawing on feedback from real legal clients, I created the prompt libraries for Airia's Legal AI Assistant, focused on compliance, consistency, and real-world usage.

This required deep research into legal workflows and risk management: understanding how lawyers think, what they fear about AI, and where automation could support (not replace) their expertise.

My Contributions

Expert Collaboration & Research:

Collaborated directly with two practicing lawyers to understand tone, terminology, and boundaries in legal AI communication—conducting iterative stakeholder interviews to validate conversational approaches.

Researched legal professional workflows, identifying where AI assistance adds value (contract drafting, clause explanation, risk analysis, citation support) versus where human judgment is non-negotiable.

Applied compliance-first design principles, ensuring every prompt avoided the unauthorized practice of law while still providing meaningful support.

Specialized Conversational Design:

Created modular prompt templates for:

Contract drafting (generating initial language while preserving attorney review)

Clause explanation (translating legalese for non-lawyers)

Risk analysis (flagging potential issues without providing legal advice)

Citation support (research assistance with auditability)

All designed with auditability and safety as primary constraints.
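A modular library like this can be sketched as shared guardrail text combined with one task-specific template per use case. The Python sketch below uses the standard library's `string.Template`, whose `substitute` raises `KeyError` when a variable is missing, so an incomplete prompt fails loudly instead of shipping with a gap. The guardrail wording, template text, and variable names are hypothetical. They are not Airia's actual prompts.

```python
from string import Template

# Hypothetical guardrail text shared by every legal template.
GUARDRAILS = (
    "You are a drafting and research assistant for licensed attorneys. "
    "Do not provide legal advice or state conclusions about a specific client's situation. "
    "Mark all output as a DRAFT requiring attorney review. "
    "If you are not certain a cited authority exists, say so instead of citing it."
)

# Hypothetical task templates, one per module.
TEMPLATES = {
    "contract_drafting": Template(
        "Draft a $clause_type clause for a $contract_type governed by $jurisdiction law."),
    "clause_explanation": Template(
        "Explain the following clause in plain English for a non-lawyer:\n$clause_text"),
    "risk_analysis": Template(
        "List provisions in the following text that an attorney may want to review, and explain why. "
        "Do not recommend a course of action.\n$document_text"),
    "citation_support": Template(
        "Find authorities relevant to: $question. For each one, give the full citation "
        "and the passage relied on so it can be verified."),
}


def build_prompt(task: str, **variables: str) -> str:
    """Combine the shared guardrails with one task template; missing variables raise KeyError."""
    body = TEMPLATES[task].substitute(**variables)
    return f"{GUARDRAILS}\n\n{body}"


print(build_prompt("clause_explanation", clause_text="The Receiving Party shall hold..."))
```

Because the guardrails are prepended in one place, a change to the safety language applies to every module at once.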

Precision Language Engineering:

Focused on language that reduces hallucination and preserves intent, because in legal work, vague AI can be risky AI.

Took complex legal concepts and translated them into repeatable, controllable prompt flows for safe internal and external use.

Developed conversational guardrails that maintain helpful assistance while respecting attorney-client privilege, work product doctrine, and ethical boundaries.

What This Work Demonstrated

High-Stakes Conversational Design:

How to design AI systems for regulated, high-risk domains where mistakes have serious consequences.

The importance of subject matter expert validation in specialized conversational AI (legal, medical, financial).

That effective AI assistance requires understanding professional liability constraints, not just user needs.

Compliance-Driven Architecture:

Built prompt structures that provide value within ethical boundaries—AI can draft, but lawyers must approve; AI can flag risks, but lawyers decide strategy.

Designed audit trails into conversational flows for legal accountability.

Created fallback mechanisms: when legal complexity exceeds AI capability, the system escalates to human expertise.
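The escalation idea can be sketched as a routing step that runs before the assistant answers. The Python example below is a minimal illustration only. The task list, the `ADVICE_MARKERS` phrases, and the `route` function are hypothetical. Simple substring matching stands in for whatever classification a production system would need, and it would miss many real requests for advice.

```python
from dataclasses import dataclass

SUPPORTED_TASKS = {"contract_drafting", "clause_explanation", "risk_analysis", "citation_support"}
# Hypothetical and deliberately simple signals of a request for legal advice.
ADVICE_MARKERS = ("should i", "what should we do", "is it legal for me")


@dataclass
class Routing:
    handler: str  # "assistant" or "attorney"
    reason: str   # recorded so the decision is auditable


def route(task: str, user_message: str, is_attorney: bool) -> Routing:
    """Send requests outside the assistant's scope to a human attorney."""
    text = user_message.lower()
    if task not in SUPPORTED_TASKS:
        return Routing("attorney", f"unsupported task: {task}")
    if not is_attorney and any(marker in text for marker in ADVICE_MARKERS):
        return Routing("attorney", "request for legal advice from a non-lawyer")
    return Routing("assistant", "within assistant scope; output remains a draft for attorney review")


print(route("clause_explanation", "Should I sign this NDA?", is_attorney=False))
```

Returning a reason alongside the handler means every routing decision can be written to the audit trail.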

Translation Capability:

Bridging technical AI teams with non-technical legal professionals who need reliability over innovation.

Making complex legal processes (contract analysis, citation research) accessible to non-lawyers without oversimplifying or creating liability.

Why Legal AI Required Different Research:

Legal professionals are skeptical adopters—they need proof of reliability before trust.

Through stakeholder interviews, I learned that lawyers fear AI for good reasons: confidentiality breaches, unauthorized practice of law, and hallucinated precedents.

This research shaped conservative design choices: AI assists but never decides, suggests but never advises, drafts but never finalizes.

Industry Context:

Legal AI is one of the fastest-growing sectors (contract automation, e-discovery, legal research).

My experience designing compliant, auditable conversational systems positions me to work across regulated industries (healthcare, finance, legal).

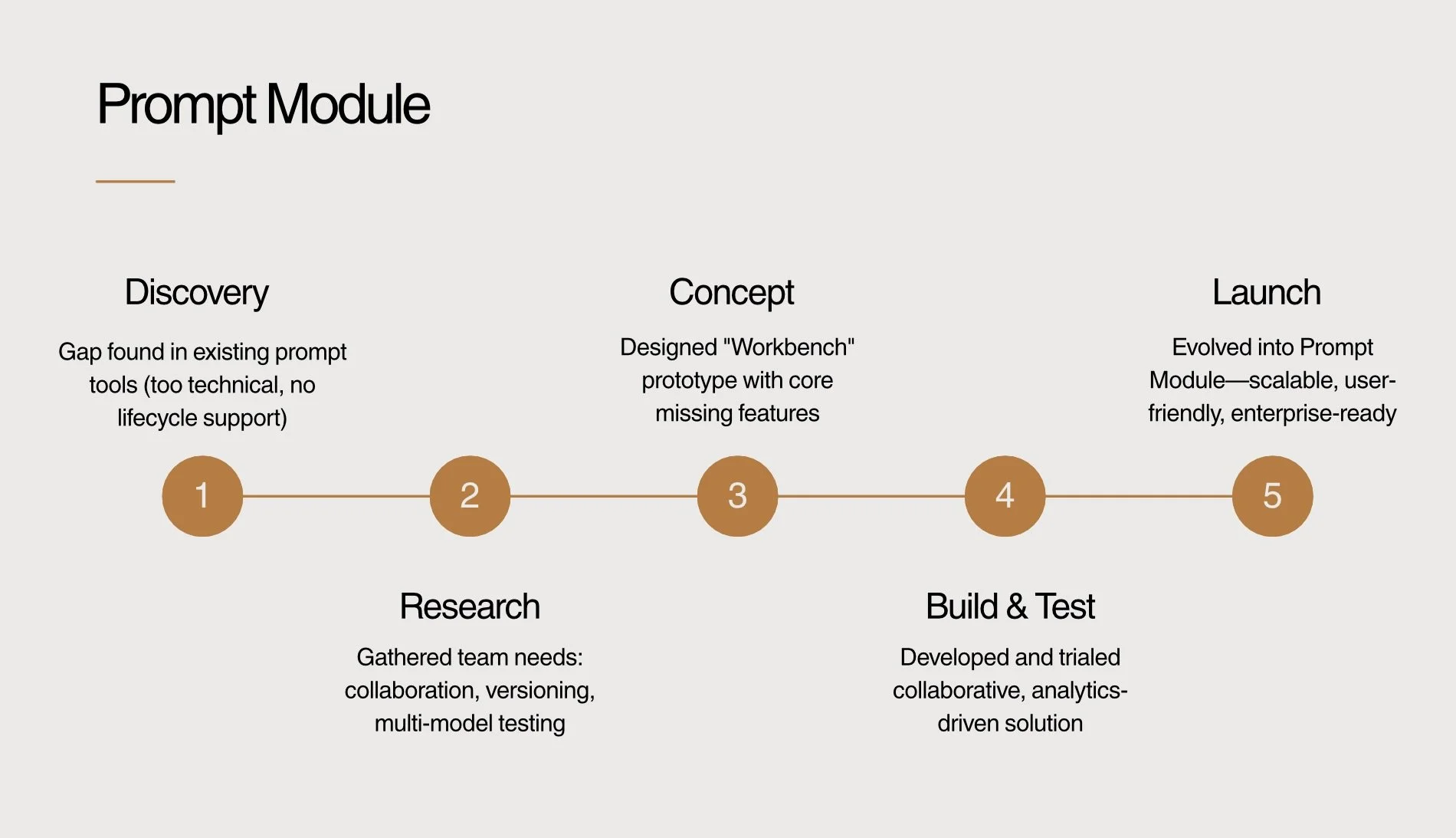

Prompt Module

The Gap in the Market: A Prompt Engineering Product Research Story

Problem Discovery & Research:

In mid-2024, our team conducted a landscape analysis of available prompt engineering tools (PromptMetheus, PromptKnit, PromptLayer, OpenAI Playground, Anthropic Console, etc.).

Through user research and stakeholder interviews, we identified three critical gaps:

Too technical for non-developers: Tools required coding knowledge or ML familiarity.

Limited collaborative features: No multi-turn conversation support or real-time team collaboration.

No lifecycle management: Organizations lacked version history, audit trails, or prompt traceability for compliance.

This research revealed a clear market opportunity: teams needed a research-friendly, non-technical prompt engineering environment that prioritized collaboration, governance, and real-world workflows.

Strategic Goal:

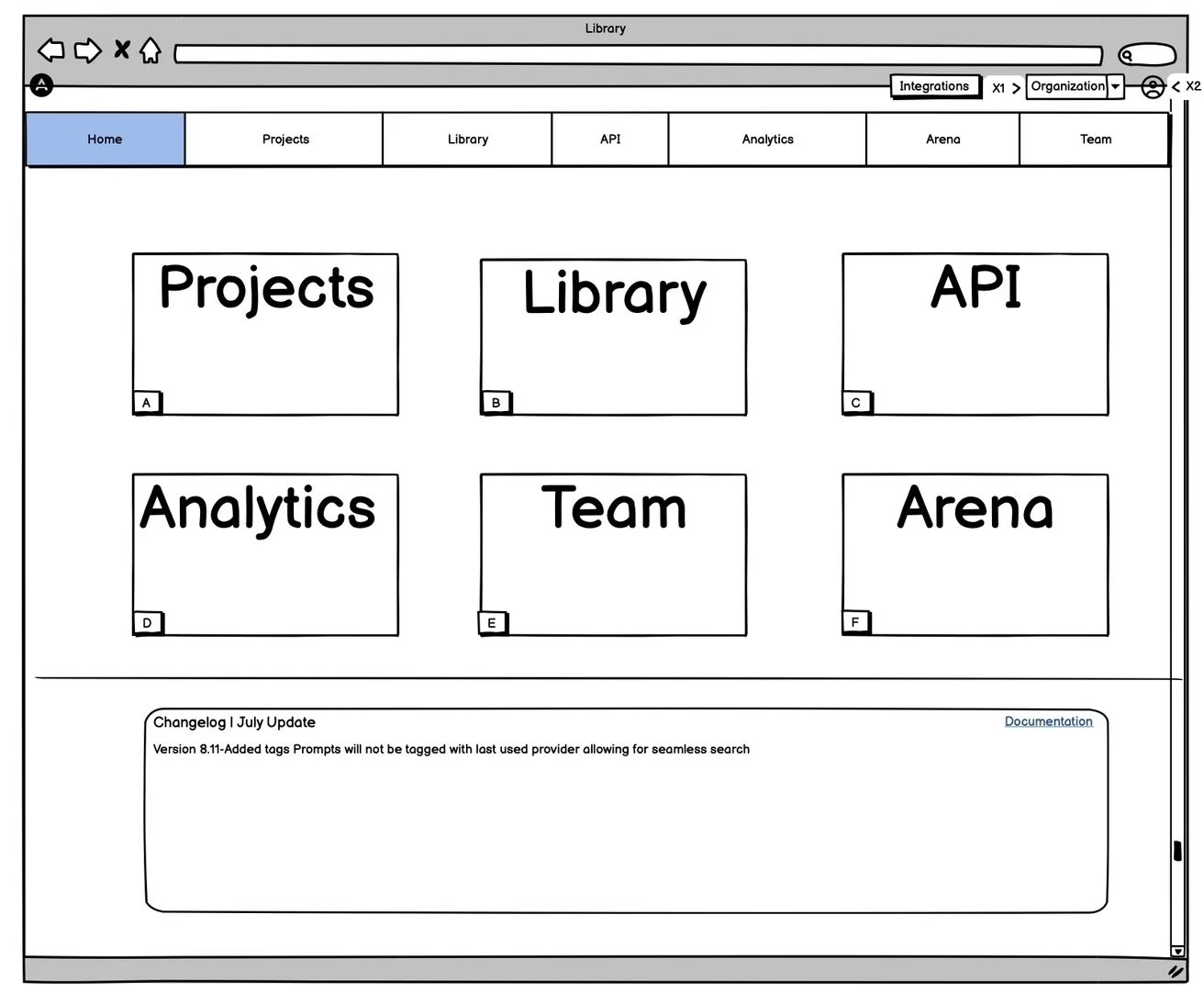

Design an internal tool (Workbench) that solved these discovered gaps:

For Teams:

Enable collaborative prompt design and testing across team members with different technical backgrounds

Provide version history and audit trails for compliance and research traceability

Support multi-model testing across providers (OpenAI, Anthropic, LLaMA, etc.)

For Organizations:

Allow import of prior conversations to evaluate prompt revisions in real context—enabling research validation

Support variable systems, function libraries, and file inputs—moving beyond simple text prompts

Create prompt lifecycle management—from development → testing → deployment → monitoring
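The lifecycle above can be modeled as a small state machine that only allows defined moves between stages. The Python sketch below is an assumption-laden illustration. The stage names come from the text, but the specific transition rules, including which stages may send a prompt back to development, are hypothetical and not Workbench's actual behavior.

```python
from enum import Enum


class Stage(Enum):
    DEVELOPMENT = "development"
    TESTING = "testing"
    DEPLOYMENT = "deployment"
    MONITORING = "monitoring"


# Hypothetical rules: testing or monitoring can send a prompt back to development.
TRANSITIONS = {
    Stage.DEVELOPMENT: {Stage.TESTING},
    Stage.TESTING: {Stage.DEPLOYMENT, Stage.DEVELOPMENT},
    Stage.DEPLOYMENT: {Stage.MONITORING},
    Stage.MONITORING: {Stage.DEVELOPMENT},
}


def advance(current: Stage, target: Stage) -> Stage:
    """Move a prompt to the next stage, rejecting shortcuts such as skipping testing."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target
```

Making untested deployment impossible by construction is a simple way to back up the governance claims a compliance reviewer will ask about.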

My Contributions

Product Strategy & Research Leadership:

Led research phase identifying user pain points with existing tools, competitive gaps, and feature priorities.

Defined product requirements based on research insights: non-technical interface, collaboration-first design, research-friendly testing.

Owned cross-functional collaboration with engineering and design to translate research into product roadmap.

Workbench Features I Designed:

Collaborative Workspace

Real-time team collaboration on prompt design

Role-based access (developers, researchers, compliance reviewers)

Conversation threading for iterative prompt refinement

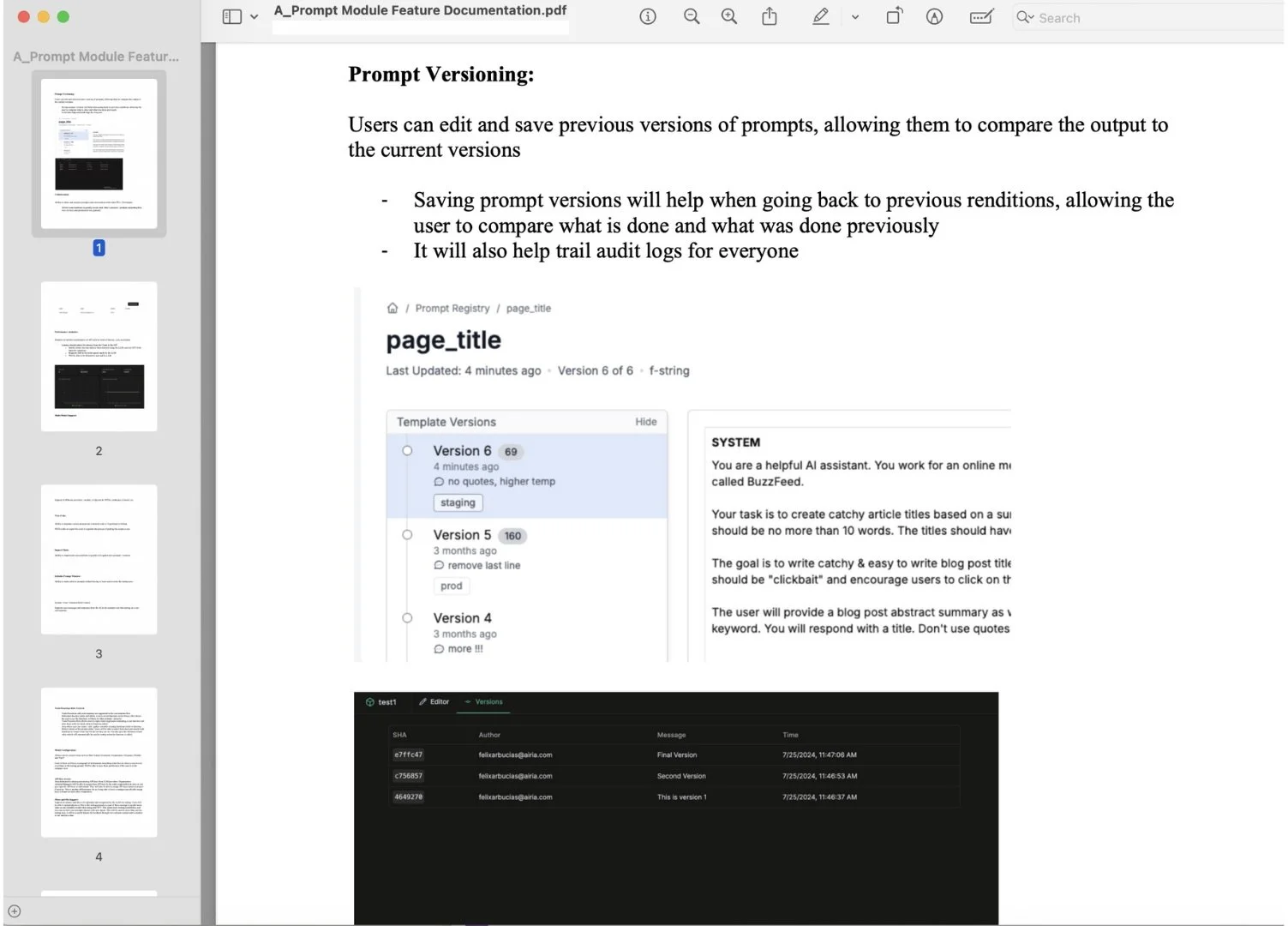

Version Control & Auditability

Full version history and diff comparison

Audit trails showing who changed what, when, and why

Critical for regulatory compliance and research reproducibility
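Version history with diffs and a "who, what, when, why" trail can be sketched with Python's standard `difflib` module. In the sketch below, the `PromptHistory` class, its method names, and the rule that every change needs a reason are hypothetical illustrations of the feature, not Workbench's implementation.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Version:
    number: int
    text: str
    author: str          # who
    reason: str          # why
    timestamp: datetime  # when


@dataclass
class PromptHistory:
    versions: list[Version] = field(default_factory=list)

    def commit(self, text: str, author: str, reason: str) -> Version:
        """Record a new prompt version; a reason is mandatory for the audit trail."""
        if not reason.strip():
            raise ValueError("every change needs a reason for the audit trail")
        version = Version(len(self.versions) + 1, text, author, reason, datetime.now(timezone.utc))
        self.versions.append(version)
        return version

    def diff(self, a: int, b: int) -> str:
        """Unified diff (what changed) between two version numbers."""
        old, new = self.versions[a - 1], self.versions[b - 1]
        return "\n".join(difflib.unified_diff(
            old.text.splitlines(), new.text.splitlines(),
            fromfile=f"v{a}", tofile=f"v{b}", lineterm=""))

    def audit_log(self) -> list[str]:
        return [f"v{v.number} {v.timestamp.isoformat()} {v.author}: {v.reason}" for v in self.versions]


history = PromptHistory()
history.commit("Be concise.\nCite sources.", "ana", "initial version")
history.commit("Be concise.\nCite sources with links.", "ben", "reviewers needed verifiable citations")
print(history.diff(1, 2))
```

Using `lineterm=""` with `splitlines()` avoids a common `difflib` pitfall where a final line without a newline runs into the next diff line.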

Multi-Model Testing Arena

Compare outputs across OpenAI, Anthropic, LLaMA simultaneously

Research-grade A/B testing for prompt variations

Statistical comparison tools for performance evaluation
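The text does not specify which statistics the comparison tools use. As one standard option, the Python sketch below applies a two-proportion z-test to the pass rates of two prompt variants, for example the share of responses that reviewers marked acceptable. The function name and the pass/fail framing are assumptions. The normal approximation is only reasonable with fairly large samples.

```python
import math


def two_proportion_z_test(passes_a: int, n_a: int, passes_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: prompt variants A and B have the same pass rate."""
    if min(n_a, n_b) <= 0:
        raise ValueError("sample sizes must be positive")
    p_a, p_b = passes_a / n_a, passes_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (passes_a + passes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both variants all pass or all fail: no evidence of a difference
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Variant A passes 60 of 100 test cases, variant B passes 80 of 100.
z, p = two_proportion_z_test(60, 100, 80, 100)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these example counts, z is a little above 3 and p is well below 0.01. This suggests variant B's higher pass rate is unlikely to be noise, assuming the test cases are independent.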

Conversation Import & Analysis

Load prior conversations to test revised prompts in original context

This is critical for research validation: Can you take a past failure and fix it with your new prompt?

Enables iterative improvement with real-world evidence
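Replaying an imported conversation can be sketched as re-running each user turn with the revised prompt while keeping the original history as context. The Python sketch below illustrates this. The `Turn` type, the `replay` function, and the model interface (a function taking a system prompt and a message history) are hypothetical. A real setup would call a provider's API where the stub is used here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Turn:
    role: str     # "user" or "assistant"
    content: str


# Hypothetical model interface: (system prompt, history) -> reply.
ModelFn = Callable[[str, list[Turn]], str]


def replay(conversation: list[Turn], new_system_prompt: str, model: ModelFn) -> list[tuple[str, str, str]]:
    """Re-run each user turn with a revised prompt, keeping the original history as context.

    Returns (user message, original reply, new reply) triples for side-by-side review.
    """
    results = []
    for i, turn in enumerate(conversation):
        if turn.role != "user":
            continue
        history = conversation[: i + 1]  # original context up to and including this user turn
        nxt = conversation[i + 1] if i + 1 < len(conversation) else None
        original = nxt.content if nxt is not None and nxt.role == "assistant" else ""
        results.append((turn.content, original, model(new_system_prompt, history)))
    return results


# Stub model for illustration: echoes the prompt and the last user message.
def stub(prompt: str, history: list[Turn]) -> str:
    return f"{prompt}|{history[-1].content}"


print(replay([Turn("user", "hi"), Turn("assistant", "old reply")], "NEW", stub))
```

Keeping the original reply next to the new one is what makes the "past failure, new prompt" comparison reviewable.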

Advanced Features

Variable support for parameterized prompts

Function libraries for complex logic chains

File/image inputs for multimodal prompt engineering

Analytics dashboard tracking prompt performance across usage

What This Research & Development Achieved:

Solved a real problem: Organizations can now collaborate on prompt engineering without technical barriers.

Enabled research workflows: Teams can test hypotheses against real conversation data, not just synthetic tests.

Created audit-ready system: Every prompt decision is traceable, version-tracked, and explainable—critical for regulated industries.

Established an industry insight: Effective prompt engineering tools must serve researchers and teams, not just individual developers.

Market Validation:

The Prompt Module became a platform-level feature at Airia.

Revealed strong demand for prompt governance and version management solutions across enterprise customers.

Positioned Airia as not just a prompt service, but a prompt engineering platform.

Research Leadership & Cross-Functional Collaboration

Designed and tested interview frameworks across healthcare, education, and legal sectors—understanding how to extract clear, unbiased insights from specialized experts.

Led research initiatives involving direct collaboration with attorneys, educators, and healthcare professionals, translating their expertise into strategic product decisions.

Mentored team members on research methodology, interview design, and documentation—building research rigor across engineering and product teams.

Served as strategic bridge between technical and non-technical stakeholders, ensuring research insights drove product direction and business alignment.